Industrial data analysis case studies, effectiveness

System integrator case studies show the benefits of in-depth data analysis.

Learning Objectives

- Understand what two case studies can teach about industrial data analysis.

- Compare and contrast differences in a large-scale, multi-plant data analytics project and a local process control data analytics effort.

- Learn how data analytics can help fill the skills gap.

Industrial data analysis insights

- A pair of case studies provide instructive examples about industrial data analysis. A large-scale, multi-plant data analytics project provides a wider, comprehensive multi-year approach. A local process control data analytics effort involves PID loop tuning modifications.

- Answered questions suggest that careful use of data analytics can help fill the skills gap, meaning fill in for talent that’s retiring in manufacturing industries by capturing and applying knowledge.

Benefits of in-depth industrial data analysis include improved operations, capture and optimal application of tribal knowledge, and agreement about how related investments will be applied, according to Laurie Cavanaugh, director of business development, E Tech Group, and Matt Ruth, president, Avanceon. The automation and process control system integrators discuss case studies that show the benefits of in-depth data analysis in this partial transcript from the April 21, 2022, RCEP PDH webcast (archived for 1 year), “Just enough industrial data analysis?” This has been edited for clarity.

Related data analytics articles

Related articles in this data analytics series are:

– Webcast preview: What is just enough industrial data analysis?

– Webcast Q&A: Questions answered: What is just enough industrial data analysis?

– Data analysis disruptors in the industrial space

– How to get started with industrial data analytics

– How to overcome obstacles to industrial data analysis

One company had an initiative called the connected shop floor. They wanted to go from the concept of connected shop floor to rolling it out across 16 of their 42 plants within a 24-month period. The 24 months includes some of the planning cycles.

Success for this industrial data analysis system integration project required having the right people at the right time and on the same page. The CEO said everybody, starting with the executive suite, wanted this connected shop floor initiative to connect people on the shop floor with the data sources, information and knowledge about how to run effectively. The CEO had the five-year vision. He didn’t say, “I need it tomorrow.” He said, “I know I want to get there,” and gave them a reasonable time period. They put core people in place. Everybody had a seat at the table, including engineering, information technology (IT) and operations. How were operational users engaged?

Some users in operations have tribal knowledge not collected in a centralized repository. Before getting operational users committed to this evolutionary effort, it was necessary to start with infrastructure, including safety. You really have to dig deep and ask: What are the constraints? Where do we need to start?

Because they knew that infrastructure was part of that process, they had to do effective planning. They launched what was called an IT/operational technology (OT) assessment. That assessment involved all levels in the organization. It collected factual information so that they could see where the vulnerabilities and risks were. How do we get empirical data? Let’s do it across all the plants and then focus on our top 16 sites. Topics included where the managed and unmanaged switches are (to address cybersecurity), what is and is not on the network, and which devices are discontinued or at the end of life.

A lot of this data was compiled, reviewed and prioritized. It’s not emotional at this point. It’s very factual, and everybody can look at the same sets of data across multiple facilities. By prioritizing, they could do a bottom-up budget. It’s not just throwing darts at a board, but a collaborative prioritization of efforts over a period of years.

The next step was the value proposition. Sharing that vision across the organization is crucial. Let’s have it funded by corporate instead of pushing that onus down to the facility and the operation.

Allocation of funds would be based on impact to the organization so nobody was left behind. It seemed fair to allocate based upon the return on investment (ROI) or the contribution back to the bottom line. There were no secrets about where the money’s being spent and how.

Finally, how do we execute this plan? They defined a set of core players and a preferred program and said, “Let’s just make sure these preferred integrators have all of the standards, and we are able to basically train, prepare and do parallel roll-outs across 16 sites.”

In addition, innovation is included in the feedback loop so even though we’re not collecting information, the process has its own set of data analytics, feedback loop and adjustment. That’s where the lessons-learned feedback comes in.

Cloud willingness, connecting operational technology data with business data and addressing cybersecurity concerns can be three technical considerations on the way to more effective industrial data analysis, according to Matt Ruth, president of Avanceon, in an April 21, 2022, webcast, “Just enough industrial data analysis?” and this partial transcript. Courtesy: CFE Media

Enabling warehouse data and factoring

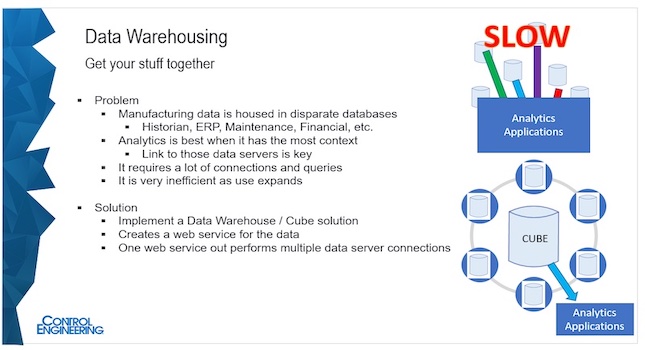

The first one is this concept of a data factory. That’s gathering information in a group. As I mentioned before, manufacturing data is housed in disparate databases for historian, enterprise resource planning (ERP), maintenance, financial and other areas, creating silos of data. They do not have connectivity, except some shared data transfers or shared tables.

Analytics are awesome with context. Analytics can learn the most about what’s going on when they can absorb data from all data sources and output useful information. To get the most out of data analytics, links to data servers are needed. It requires many connections and queries to be able to get information from different servers. Connections to ERP and other financial systems are especially difficult and rarely done. Instead, they lean on exported spreadsheets.

Exported spreadsheets can be integrated, but adding that burden is a challenge in today’s environment. As analytics applications expand, performance will decrease. Tying into a data warehouse function, a data factory solution takes inputs, transforms them into a data product and provides an output.

A data factory provides services that add context to data quickly and make it easily accessible to workers. Analytics applications can be connected to the data factory to streamline data access.
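The data factory idea described above (inputs in, a contextualized data product out) can be sketched roughly as follows. This is a minimal illustration using only the Python standard library, not the integrator’s implementation. The record types, field names, tag name (`TT-101`), batch IDs and values are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class HistorianSample:
    """One time-stamped process reading (hypothetical historian export)."""
    timestamp: datetime
    tag: str
    value: float


@dataclass
class ErpBatch:
    """One production batch record (hypothetical ERP export)."""
    batch_id: str
    product: str
    start: datetime
    end: datetime


def build_data_product(samples, batches, tag):
    """Add batch context to raw readings and summarize them per batch."""
    rows = []
    for batch in batches:
        values = [
            s.value
            for s in samples
            if s.tag == tag and batch.start <= s.timestamp < batch.end
        ]
        rows.append({
            "batch_id": batch.batch_id,
            "product": batch.product,
            "tag": tag,
            "samples": len(values),
            "mean_value": mean(values) if values else None,
        })
    return rows


# Illustrative values only, not taken from the case study.
samples = [
    HistorianSample(datetime(2022, 4, 21, 8, 0), "TT-101", 70.0),
    HistorianSample(datetime(2022, 4, 21, 8, 30), "TT-101", 74.0),
    HistorianSample(datetime(2022, 4, 21, 9, 15), "TT-101", 80.0),
]
batches = [
    ErpBatch("B-001", "Product A",
             datetime(2022, 4, 21, 8, 0), datetime(2022, 4, 21, 9, 0)),
    ErpBatch("B-002", "Product B",
             datetime(2022, 4, 21, 9, 0), datetime(2022, 4, 21, 10, 0)),
]

product_rows = build_data_product(samples, batches, "TT-101")
for row in product_rows:
    print(row)
```

In this sketch, the analytics application reads only the joined rows, so it does not need its own connections to the historian and the ERP system.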

This creates clean data. A data-decision-driven factory allows some predictive and prescriptive activities to happen, and it makes simple analysis self-servable. It creates the ability for people to self-serve information and get smarter as they go.

Diagnostic case study

A second, diagnostic case study investigates and addresses process problems. Everybody that does any manufacturing process control leverages the use of a proportional-integral-derivative (PID) algorithm. PID algorithms are notorious for being difficult to understand and somewhat of a black art such that people don’t like to touch or modify them very much.

As a result, many PID loops still run today the way they were tuned at a factory’s or system’s inception. A large food and beverage manufacturer had such a problem. With very heavy demand on process systems, they realized that the clean-in-place (CIP) function was losing a huge amount of production time. When something is being cleaned, it’s not producing product.

Cleaning was going too long, but it seemed random and related to PID and control problems. To make it worse, new people in the facility didn’t have any context or understanding of what happened in the past.

Importance of data cleansing to PID analytics

A PID analytics project dove deeper into the information available in the process historian to understand where the problems were and analyze the big picture.

This analytics container is made of data collection connected to the historian. It also has some data organization to ensure data appears in the right columns, in the right areas, and in the right places. Most importantly, it does a lot of cleaning, filtering and testing. Cleaning means throwing out all the stuff that doesn’t mean anything, such as if the loop is running. Take events out of the picture and do data tests to mark when things do matter.

Then run the model and formulas to produce an output. In this case, the output provides a big picture of what was run.
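The collect, organize, clean and model sequence described above might look something like the sketch below. It takes one reading of the cleaning step: samples recorded while the loop was not running, along with missing or out-of-range readings, are dropped before any formula is applied. The field names (`sp`, `pv`, `running`), the valid range and the error metric are assumptions for illustration, not details from the project.

```python
from statistics import mean


def clean(records, low, high):
    """Keep only samples taken while the loop was running and within range."""
    kept = []
    for r in records:
        if not r["running"]:
            continue  # assumption: idle-loop samples say nothing about tuning
        if r["pv"] is None or not (low <= r["pv"] <= high):
            continue  # drop missing or out-of-range readings
        kept.append(r)
    return kept


def mean_absolute_error(records):
    """Model step: average distance between setpoint and process value."""
    return mean(abs(r["sp"] - r["pv"]) for r in records)


# Hypothetical historian rows: setpoint (sp), process value (pv), running flag.
raw = [
    {"sp": 80.0, "pv": 78.0, "running": True},
    {"sp": 80.0, "pv": 83.0, "running": True},
    {"sp": 80.0, "pv": 20.0, "running": False},  # loop idle
    {"sp": 80.0, "pv": None, "running": True},   # missing reading
    {"sp": 80.0, "pv": 999.0, "running": True},  # implausible sensor value
    {"sp": 80.0, "pv": 81.0, "running": True},
]

cleaned = clean(raw, low=0.0, high=150.0)
error = mean_absolute_error(cleaned)
print(len(cleaned), error)
```

Without the cleaning step, the idle and faulty samples would dominate the error figure and hide how the loop behaves when it is actually controlling.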

Data cleansing, an important part of analytics, allows creation of a report card, in this case showing terrible performance across-the-board on different CIP circuits and all process parameters.

This report card enabled creation of a deep-dive narrative covering why each loop performed the way it did, how the circuits performed, how much the loops oscillated, how performance had degraded over the past two years, and how loops interacted with other processes, circuits, modes and operations, in order to identify some multimodal combinations.
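As a rough illustration of how a per-loop report card could flag oscillation, the sketch below counts sign changes in the control error (setpoint minus process value) and grades each loop against a threshold. The loop names, the error values and the 0.3 threshold are placeholders, and the source does not say which metrics the actual project used.

```python
def zero_crossings(errors):
    """Count sign changes in the control error, a crude oscillation indicator."""
    signs = [1 if e > 0 else -1 for e in errors if e != 0]
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


def grade(errors, max_crossing_rate=0.3):
    """Grade one loop; the threshold is a placeholder, not an industry value."""
    if len(errors) < 2:
        return "n/a"
    rate = zero_crossings(errors) / (len(errors) - 1)
    return "poor" if rate > max_crossing_rate else "ok"


# Hypothetical control-error series for two loops on one CIP circuit.
loops = {
    "CIP-1 supply temperature": [2.0, -2.0, 1.5, -1.5, 2.0, -2.0],
    "CIP-1 flow": [3.0, 2.0, 1.0, 0.5, 0.2, 0.1],
}

report_card = {name: grade(errs) for name, errs in loops.items()}
for name, result in report_card.items():
    print(f"{name}: {result}")
```

A real report card would likely combine several such indicators and track them over time to show degradation. This sketch covers only the oscillation check.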

At the end of the day, this analysis allowed the customer to uncover significant issues with programmable logic controller (PLC) code and how they operated the system. It also identified various and unrelated scenarios where the CIP system would perform terribly. After control code was modified, CIP performance time improved about 5%.

That might not seem like a lot, but when cleaning requires six hours a day, a 5% improvement translated into an ROI in less than 3 months. In this case, analytics answered the “Why did it happen?” question, which is a good way to use analytics in a process environment.
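To put the figures above in perspective, the arithmetic can be worked through directly. The six hours a day and the 5% improvement come from the case study. The 365 operating days per year is an assumption added for illustration, and no project cost or production value is given, so the payback period itself is not recomputed here.

```python
# Figures from the case study.
cleaning_minutes_per_day = 6 * 60   # six hours of CIP per day
improvement_percent = 5             # approximate improvement after code changes

# Assumption for illustration: the plant cleans every day of the year.
operating_days_per_year = 365

saved_minutes_per_day = cleaning_minutes_per_day * improvement_percent / 100
saved_hours_per_year = saved_minutes_per_day * operating_days_per_year / 60

print(f"Recovered per day: {saved_minutes_per_day} minutes")
print(f"Recovered per year: {saved_hours_per_year} hours")
```

Under these assumptions, the improvement returns 18 minutes of production time per day, or roughly 110 hours per year on a single system.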

Two paths to applying data analytics

There are two paths to just right. The first is to develop a path along the way: It takes a long-term vision and a lot of sponsorship, and it requires execution against that vision. The second path works without as much vision into the organization: Identify the data, figure out how to get it into a warehouse or repository, and put together some reports and dashboards to get descriptive analytics going. Your team can then learn how to refine that information, define and expand use cases, and dig into more descriptive, diagnostic, predictive and prescriptive opportunities. Then integrate the analytics as you see fit into your overall processes to continue to improve the performance of systems.

Webcast questions and answers on industrial data analytics

During the webcast, the presenters answered some questions, below. See separate article linked above for additional answers.

Q: How is the retirement of long-tenured staff, and the loss of their tribal knowledge, expediting the importance of diagnostics and analytics for the next generation of plant engineers?

Ruth: You have to figure out how to gather this information and get it up to folks, to be able to easily visualize problems. Diagnostic analytics is key to being able to expose things. Take the opportunity, as folks are retiring, to understand those rules and paths to expose that information. I see data analytics having a huge part in that transition as the baby boomers retire and as their expertise leaves the facility, as people pursue other careers. Data analytics make those transitions easier to manage.

Cavanaugh: The other part of that is content delivery. Everybody is consuming information differently now outside the industrial workspace. They’re used to trying to find a YouTube video to explain how to do something. We must start delivering some of that knowledge and information in context using the delivery methods that younger generations and the rest of us use to inform behavior. Be creative and think about the delivery, user interface and the user experience.

Q: Once the decision has been made to use data analytics, is it difficult to agree on project goals? What does success look like?

Cavanaugh: I think it returns to the questions we are trying to solve. What has the biggest impact? What people are going to rally around is a big win, or maybe even a small win, but a win nonetheless. It says: We saw this, and we identified the problem as a team, with an identifiable return on investment. It affected multiple people. Let’s pick this. Let this be the example, the pilot, the thing that we rally around next time something is tough, when we’re trying to solve a different problem.

– Edited by Tyler Wall, assistant editor, CFE Media and Technology, twall@cfemedia.com, from a Control Engineering RCEP/PDH development hour webcast.

KEYWORDS

Industrial data analytics applications

CONSIDER THIS

Are you using data analytics to your best advantage?

ONLINE

https://www.controleng.com/webcasts/just-enough-industrial-data-analysis/
